Audio-driven Neural Gesture Reenactment with Video Motion Graphs

CVPR 2022

Abstract

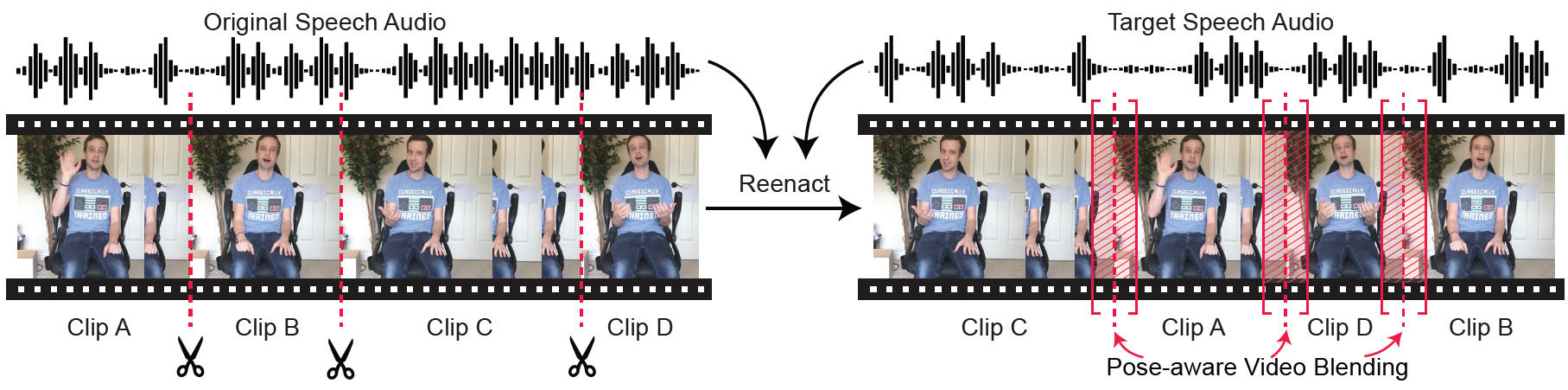

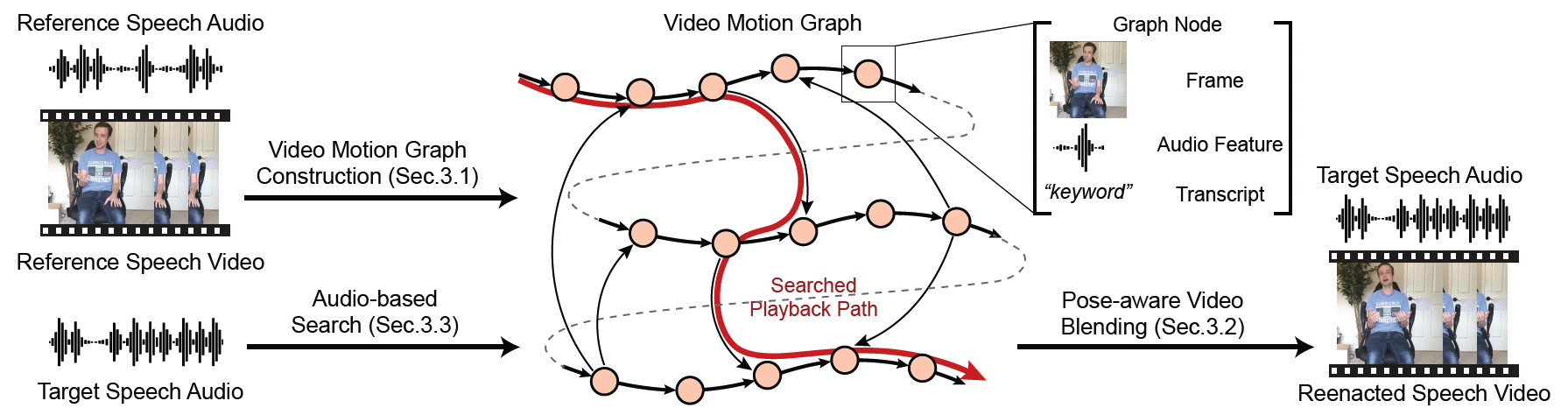

Human speech is often accompanied by body gestures including arm and hand gestures. We present a method that reenacts a high-quality video with gestures matching a target speech audio. The key idea of our method is to split and re-assemble clips from a reference video through a novel video motion graph encoding valid transitions between clips. To seamlessly connect different clips in the reenactment, we propose a pose-aware video blending network which synthesizes video frames around the stitched frames between two clips. Moreover, we developed an audio-based gesture searching algorithm to find the optimal order of the reenacted frames. Our system generates reenactments that are consistent with both the audio rhythms and the speech content. We evaluate our synthesized video quality quantitatively, qualitatively, and with user studies, demonstrating that our method produces videos of much higher quality and consistency with the target audio compared to previous work and baselines.

System overview. The reference video is first encoded into a directed graph where nodes represent video frames and audio features, and edges represent transitions. The transitions include original ones between consecutive reference frames, and synthetic ones between disjoint frames. Given a unseen target audio at test time, a beam search algorithm finds plausible playback paths such that gestures best match the target speech audio. Synthetic transitions along disjoint frames are neurally blended to achieve temporal consistency.

1. Reenacted Video Results | |||||||||||

Example 1: our system reenacts the reference video with target speech audio. | |||||||||||

| + | = | ||||||||||

| Reference Video - Speaker A (a short sample clip) |

Target Audio - Speaker B | Reenacted Video - A's appearance + B's voice | |||||||||

Example 2: reenacted results on TED-talks speaker. | |||||||||||

| + | = | ||||||||||

| Reference Video - Speaker II (a short sample clip) |

Target Audio | Reenacted Video - Reenacted Speaker II's video | |||||||||

2. Our Blended Playback vs. Direct PlaybackWe show the GIF result for Fig.3 in the main paper (played in 3x slow motion). | |||||||||||

|

|

→ |

|

|

|||||||

| Input Sequence 1 | Input Sequence 2 |

Direct Playback

(notice the temporal jitter in the middle) |

Blended Playback

(ours seamless blended result) |

||||||||

|

Another example on TED-talks dataset. | |||||||||||

|

|

→ |

|

|

|||||||

| Input Sequence 1 | Input Sequence 2 |

Direct Playback

(notice the temporal jitter in the middle) |

Blended Playback

(ours seamless blended result) |

||||||||

3. Alternative Transitions on Video Motion GraphGiven the same input sequence, we show alternative transitions searched by proposed video motion graph (see Sec. 3.1). | |||||||||||

|

|||||||||||

| Same Input Sequence A | Searched Alternative Sequence B1 | Our Synthesized Blended Playback A → B1 |

|||||||||

|

|||||||||||

| Same Input Sequence A | Searched Alternative Sequence B2 | Our Synthesized Blended Playback A → B2 |

|||||||||

4. Alternative Reenacted Results from Beam Search | |||||||||||

Given a reference video and a target audio, our system can synthesize alternative reenacted results from different paths in the motion graph. Specifically, we select the most plausible K paths found by beam search described in Section 3.3. | |||||||||||

|

Reference Video

Reference Audio

|

→ |

Result from Searched Path 1

Result from Searched Path 4

|

Result from Searched Path 2

Result from Searched Path 5

|

Result from Searched Path 3

Result from Searched Path 6

|

|||||||

5. Comparison: Ours vs. GT | |||||||||||||

|

Below we show several videos either from our reenacted results or ground-truth videos. | |||||||||||||

↑ Click to show answer! ↑ |

↑ Click to show answer! ↑ |

↑ Click to show answer! ↑ |

|||||||||||

↑ Click to show answer! ↑ |

↑ Click to show answer! ↑ |

↑ Click to show answer! ↑ |

|||||||||||

↑ Click to show answer! ↑ |

↑ Click to show answer! ↑ |

↑ Click to show answer! ↑ |

|||||||||||

6. Comparison: Ours vs. Other SOTA Methods for Video Synthesis Results | |||||||||||

Here we show the video results for Fig.6 in the main paper. The videos have no sound. | |||||||||||

| ↓ GT ↓ | ↓ FeatureFlow[25] ↓ | ↓ SuperSlMo[32] ↓ | |||||||||

| Example 1 | |||||||||||

| ↑ Ours ↑ | ↑ vUnet[22] ↑ | ↑ EBDance[16] ↑ | |||||||||

| ‑ | |||||||||||

| ↓ GT ↓ | ↓ FeatureFlow[25] ↓ | ↓ SuperSlMo[32] ↓ | |||||||||

| Example 2 | |||||||||||

| ↑ Ours ↑ | ↑ vUnet[22] ↑ | ↑ EBDance[16] ↑ | |||||||||

7. Comparison: Blended Playback by Ours vs. Other SOTA Methods | |||||||||||||||

We compare our pose-aware blending network and other state-of-the-art methods for synthesizing blended frames around transition points (shown in GIF results played in 3x slow motion). | |||||||||||||||

Input Sequence 1

Input Sequence 2

|

→ |

FeatureFlow[25]

vUnet[22]

|

SuperSlMo[32]

EBDance[16]

|

Ours

|

|||||||||||

8. LimitationOur video blending network can blend the foreground human poses and slight background changes, but it fails on considerably changed backgrounds. | |||||||||||

|

|

→ |

|

||||||||

| Input Sequence 1 | Input Sequence 2 |

Blended Playback

Limitation: background cannot be blended smoothly when it changes considerably. |

|||||||||

Besides, our video blending results are also affected by the off-the-shelf motion capture method [72]. If the captured SMPL model is not accurate, e.g. the limbs, fingers are not well-aligned with image, our blending network which utilizes the foreground image warping based on the captured mesh may not achieve the best performance. | |||||||||||

Paper

VideoReenact.pdf, 9.4MB

VideoReenact_Supp.pdf, 9.4MB

Citation

Yang Zhou, Jimei Yang, Dingzeyu Li, Jun Saito, Deepali Aneja, Evangelos Kalogerakis, "Audio-driven Neural Gesture Reenactment with Video Motion Graphs", CVPR 2022

Video

Coming soon.

Presentation

Coming soon!

Source Code & Data

Coming soon!

References[16] Caroline Chan, Shiry Ginosar, Tinghui Zhou, and Alexei A Efros. Everybody dance now. In Proc. ICCV, 2019. [22] Patrick Esser, Ekaterina Sutter, and Björn Ommer. A variational u-net for conditional appearance and shape generation. In Proc. CVPR, 2018. [25] Shurui Gui, Chaoyue Wang, Qihua Chen, and Dacheng Tao. Featureflow: robust video interpolation via structure-to-texture generation. In Proc. CVPR, 2020. [32] Huaizu Jiang, Deqing Sun, Varun Jampani, Ming-Hsuan Yang, Erik Learned-Miller, and Jan Kautz. Super slomo: High quality estimation of multiple intermediate frames for video interpolation. In Proc. CVPR, 2018. [72] Donglai Xiang, Hanbyul Joo, and Yaser Sheikh. Monocular total capture: Posing face, body, and hands in the wild. In Proc. CVPR, 2019. **Note: reference indices are same to the ones used in the main paper. |

Acknowledgements

We acknowledge support from NSF (EAGER-1942069) and Adobe.